
NarrativeLoom: Enhancing Creative Storytelling through Multi-Persona Collaborative Improvisation

Yuxi Ma*,

Yongqian Peng*,

Fengyuan Yang,

Siyu Zha,

Chi Zhang,

Zixia Jia,

Zilong Zheng✉️,

Yixin Zhu✉️

In CHI, 2026

[Paper] [Project] [Video]

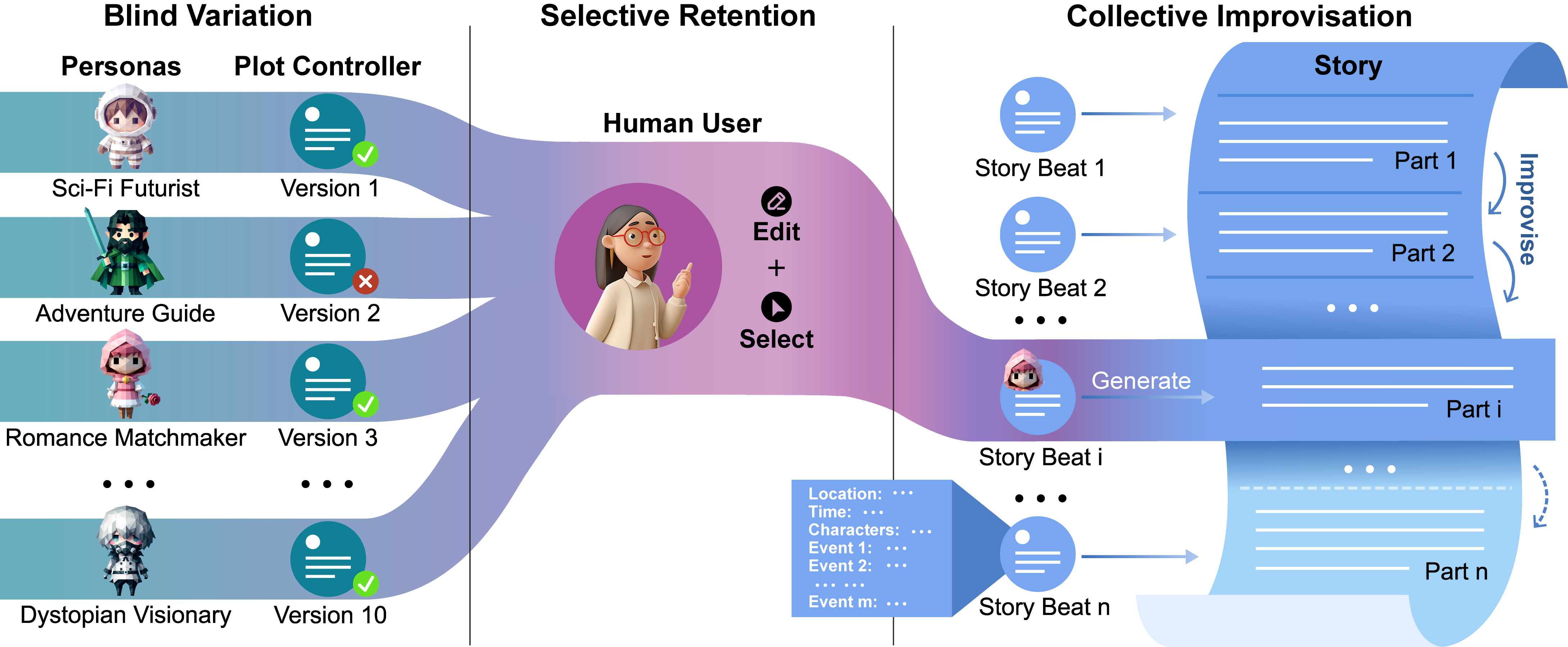

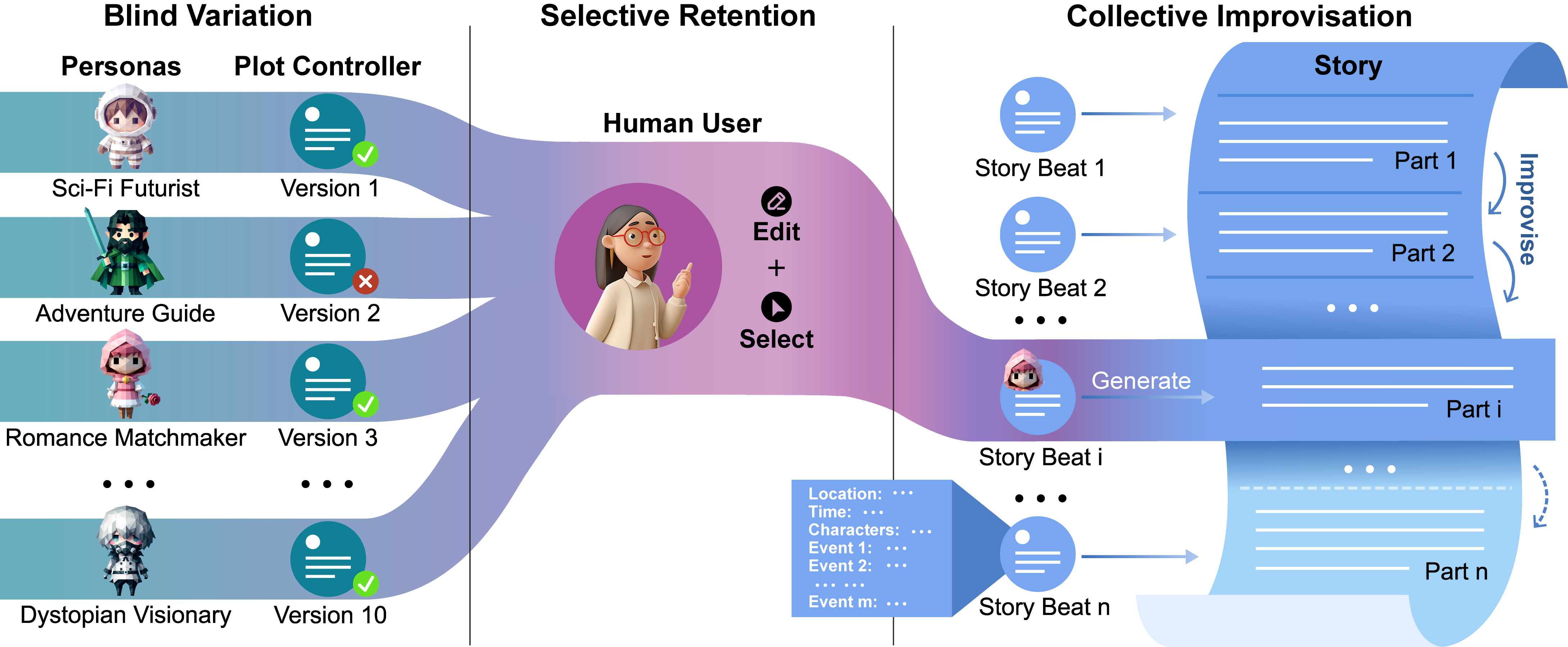

Storytelling, a cornerstone of human culture, thrives on creativity, a domain where modern AI systems often falter. While these systems excel at maintaining narrative coherence, they frequently produce technically sound yet predictable narratives. To address this gap, we introduce NarrativeLoom, a collaborative system grounded in psychological theories of creativity, specifically the Blind Variation and Selective Retention (BVSR) framework. NarrativeLoom leverages multiple AI personas, each embodying a distinct narrative style, to generate diverse story variations. Users iteratively refine these ideas through selective retention, balancing novelty, coherence, and personalization. In controlled experiments, NarrativeLoom outperformed single-persona and chatbot-based systems on creativity and diversity, producing longer, more spatially rich stories without compromising readability. These results empirically validate BVSR's role in computational creativity and establish a framework for human-AI co-creation, demonstrating how AI can amplify, rather than replace, human creative potential.

tl;dr: We introduce NarrativeLoom, a BVSR-inspired multi-persona co-creative system that enables writers to explore diverse narrative possibilities while retaining human creative control, resulting in significantly more creative stories than single-voice AI tools.


Probing and Inducing Combinational Creativity in Vision-Language Models

Yongqian Peng*,

Yuxi Ma*,

Mengmeng Wang,

Yuxuan Wang,

Yizhou Wang,

Chi Zhang,

Yixin Zhu✉️,

Zilong Zheng✉️

In CogSci, 2025 (Talk)

[Paper] [Slides] [arXiv] [Project] [Code] [Data] [Video]

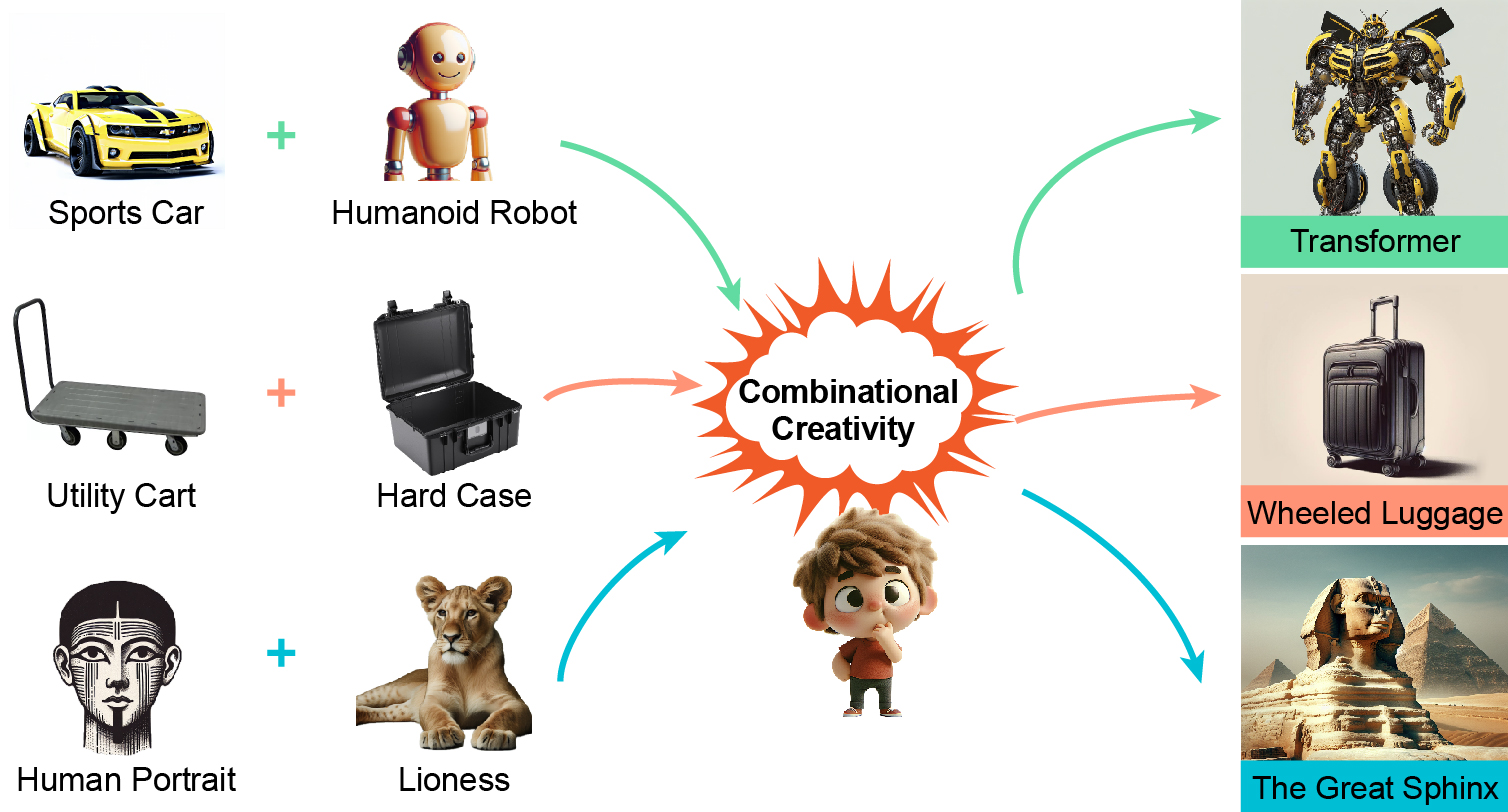

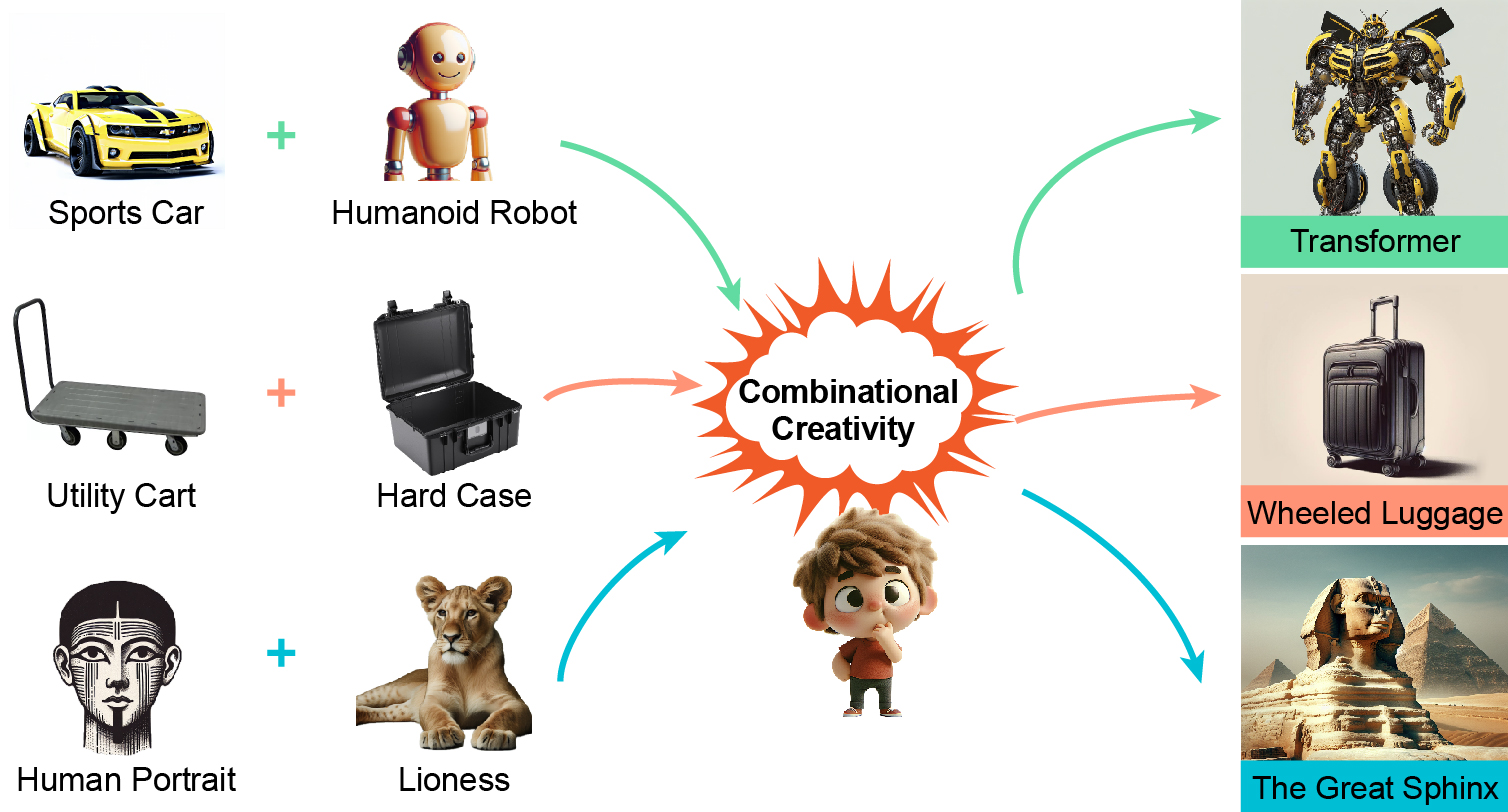

The ability to combine existing concepts into novel ideas is a fundamental hallmark of human intelligence. Recent advances in Vision-Language Models (VLMs) such as GPT-4V and DALL-E 3 have sparked debate about whether their outputs reflect combinational creativity (which M. A. Boden (1998) defines as synthesizing novel ideas by combining existing concepts) or sophisticated pattern matching over their training data. Drawing inspiration from cognitive science, we investigate the combinational creativity of VLMs through the lens of conceptual blending. We propose the Identification-Explanation-Implication (IEI) framework, which decomposes creative processes into three levels: identifying input spaces, extracting shared attributes, and deriving novel semantic implications. To validate this framework, we curate CreativeMashup, a high-quality dataset of 666 artist-generated visual mashups annotated according to the IEI framework. Through extensive experiments, we demonstrate that in comprehension tasks, the best VLMs surpass average human performance but fall short of expert-level understanding; in generation tasks, incorporating the IEI framework into the generation pipeline significantly enhances the creative quality of VLM outputs. Our findings establish both a theoretical foundation for evaluating artificial creativity and practical guidelines for improving creative generation in VLMs.

tl;dr: We systematically investigate the combinational creativity of VLMs through the lens of conceptual blending, proposing a novel framework and dataset to evaluate their comprehension and enhance their creative generation capabilities.


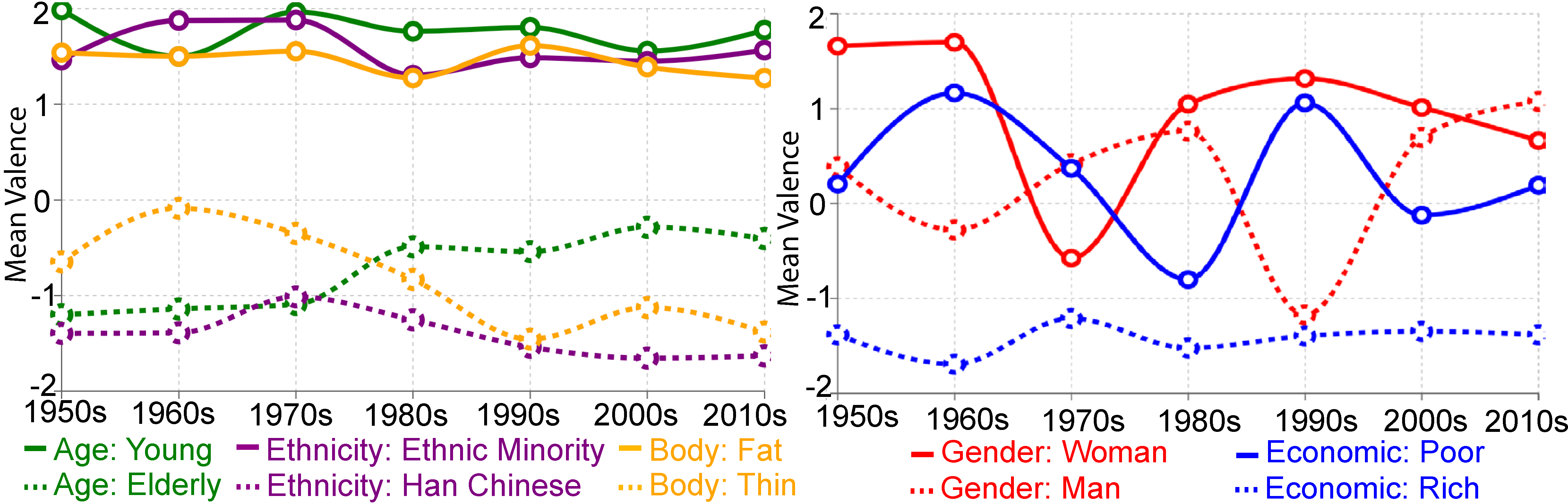

Word Embeddings Track Social Group Changes Across 70 Years in China

Yuxi Ma,

Yongqian Peng,

Yixin Zhu✉️

In CogSci, 2025

[Paper]

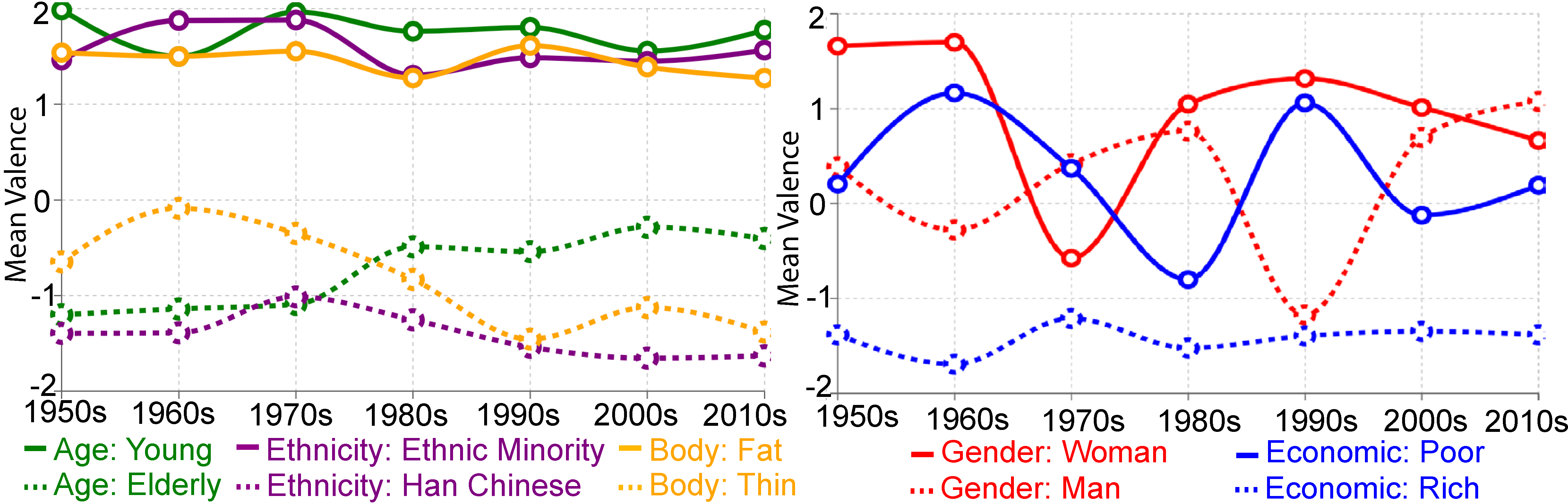

Language encodes societal beliefs about social groups through word patterns. While computational methods like word embeddings enable quantitative analysis of these patterns, studies have primarily examined gradual shifts in Western contexts. We present the first large-scale computational analysis of Chinese state-controlled media (1950-2019) to examine how revolutionary social transformations are reflected in official linguistic representations of social groups. Using diachronic word embeddings at multiple temporal resolutions, we find that Chinese representations differ significantly from Western counterparts, particularly regarding economic status, ethnicity, and gender. These representations show distinct evolutionary dynamics: while stereotypes of ethnicity, age, and body type remain remarkably stable across political upheavals, representations of gender and economic classes undergo dramatic shifts tracking historical transformations. This work advances our understanding of how officially sanctioned discourse encodes social structure through language while highlighting the importance of non-Western perspectives in computational social science.

tl;dr: Using 70 years of Chinese official text, we show that some social stereotypes remain remarkably stable, while gender and class representations are rapidly rewritten during political and economic upheavals, revealing language as both a mirror of society and a site of power.


Cognitive Reasoning (CoRe) Lab, Institute for Artificial Intelligence, Peking University, China

July 2023 - Present

Student Researcher

Advisor: Prof. Yixin Zhu


Peking University, China

Aug 2021 - Present

Undergraduate Student
